What’s PCI-X?

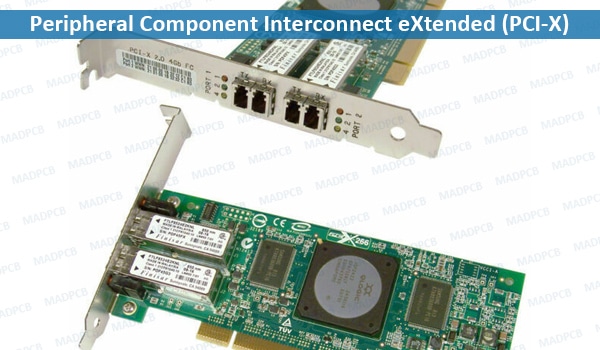

Peripheral Component Interconnect eXtended (PCI-X) is a computer bus and expansion card standard that enhances the 32-bit PCI local bus for the higher bandwidth demanded mostly by servers and workstations. It uses a modified protocol to support higher clock speeds (up to 133 MHz), but is otherwise similar in electrical implementation. PCI-X 2.0 added speeds up to 533 MHz, with a reduction in electrical signal levels. PCI-X cards are printed circuit boards (PCBs) that plug directly into a motherboard (mainboard) through its slot connectors.

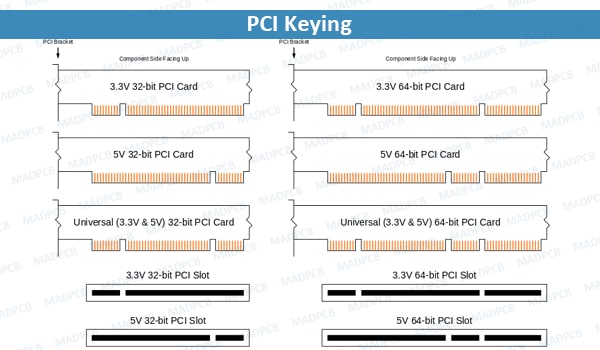

The slot is physically a 3.3 V PCI slot, with exactly the same size, location and pin assignments. The electrical specifications are compatible, but stricter. However, while most conventional PCI slots are the 85 mm long 32-bit version, most PCI-X devices use the 130 mm long 64-bit slot, to the point that 64-bit PCI connectors and PCI-X support are seen as synonymous.

PCI-X is in fact fully specified for both 32-bit and 64-bit PCI connectors, and PCI-X 2.0 added a 16-bit variant for embedded applications. It has been replaced in modern designs by the similar-sounding PCI Express (officially abbreviated as PCIe), with a completely different physical connector and a very different electrical design, having one or more narrow but fast serial connection lanes instead of a number of slower connections in parallel.

Background and Motivation

In PCI, a transaction that cannot be completed immediately is postponed by either the target or the initiator issuing retry-cycles, during which no other agents can use the PCI bus. Since PCI lacks a split-response mechanism to permit the target to return data at a later time, the bus remains occupied by the target issuing retry-cycles until the read data is ready. In PCI-X, after the master issues the request, it disconnects from the PCI bus, allowing other agents to use the bus. The split-response containing the requested data is generated only when the target is ready to return all of the requested data. Split-responses increase bus efficiency by eliminating retry-cycles, during which no data can be transferred across the bus.

PCI also suffered from the relative scarcity of unique interrupt lines. With only four interrupt lines (INTA/B/C/D), systems with many PCI devices require multiple functions to share an interrupt line, complicating host-side interrupt handling. PCI-X added Message Signaled Interrupts (MSI), an interrupt system using writes to host memory. In MSI mode, the function’s interrupt is not signaled by asserting an INTx line. Instead, the function performs a memory write to a system-configured region in host memory. Since the content and address are configured on a per-function basis, MSI-mode interrupts are dedicated instead of shared. A PCI-X system allows both MSI-mode interrupts and legacy INTx interrupts to be used simultaneously (though not by the same function).

The lack of registered I/Os limited PCI to a maximum frequency of 66 MHz. PCI-X I/Os are registered to the PCI clock, usually by means of a PLL that actively controls the I/O delay of the bus pins. The resulting improvement in setup time allows an increase in frequency to 133 MHz.

Some devices, most notably Gigabit Ethernet cards, SCSI controllers (Fibre Channel and Ultra320), and cluster interconnects could by themselves saturate the PCI bus’s 133 MB/s bandwidth. Ports using a bus speed doubled to 66 MHz and a bus width doubled to 64 bits (with the pin count increased to 184 from 124), in combination or not, have been implemented. These extensions were loosely supported as optional parts of the PCI 2.x standards, but device compatibility beyond the basic 133 MB/s continued to be difficult.

Developers eventually used the combined 64-bit and 66-MHz extension as a foundation, and, anticipating future needs, established 66-MHz and 133-MHz variants with a maximum bandwidth of 532 MB/s and 1064 MB/s respectively. The joint result was submitted as PCI-X to the PCI Special Interest Group (PCI SIG). Subsequent approval made it an open standard adoptable by all computer developers. The PCI SIG controls technical support, training, and compliance testing for PCI-X. IBM, Intel, Microelectronics, and Mylex were to develop supporting chipsets. 3Com and Adaptec were to develop compatible peripherals. To accelerate PCI-X adoption by the industry, Compaq offered PCI-X development tools on its website.

PCI-X 1.0

The PCI-X standard was developed jointly by IBM, HP, and Compaq and submitted for approval in 1998. It was an effort to codify proprietary server extensions to the PCI local bus in order to address several shortcomings in PCI, to increase the performance of high-bandwidth devices such as Gigabit Ethernet, Fibre Channel, and Ultra3 SCSI cards, and to allow processors to be interconnected in clusters.

Intel gave only a qualified welcome to PCI-X, stressing that the next generation bus would have to be a “fundamentally new architecture”. Without Intel’s support, PCI-X failed to be adopted in PCs. The PCI-X interface was however briefly adopted by Apple, for the first few generations of the Power Macintosh G5.

The first PCI-X products were manufactured in 1998, such as the Adaptec AHA-3950U2B dual Ultra2 Wide SCSI controller. At that point, however, the PCI-X connector was merely referred to as “64-bit ready PCI” on packaging, hinting at future forward compatibility. Actual PCI-X branding only became standard later, likely coinciding with widespread availability of PCI-X equipped motherboards. When more details of PCI Express were released in August 2001, PCI SIG chairman Roger Tipley expressed his belief that “PCI-X is going to be in servers forever because it serves a certain level of functionality, and it may not be compelling to switch to 3GIO (PCI Express) for that functionality. We learned that from not being able to get rid of ISA. ISA hung around because of all of these systems that weren’t high-volume parts.” Tipley also announced that, at the time, the PCI SIG was planning to fold PCI Express and PCI-X 2.0 into a single work tentatively called PCI 3.0, but that name was eventually used for a relatively minor revision of conventional PCI.

PCI-X 2.0

In 2003, the PCI SIG ratified PCI-X 2.0. It adds 266-MHz and 533-MHz variants, yielding roughly 2,132 MB/s and 4,266 MB/s throughput, respectively. PCI-X 2.0 makes additional protocol revisions that are designed to improve system reliability, and it adds error-correcting codes to the bus to avoid re-sends. To deal with one of the most common complaints about the PCI-X form factor, the 184-pin connector, 16-bit ports were developed to allow PCI-X to be used in devices with tight space constraints. Similar to PCI Express, PtP functions were added to allow devices on the bus to talk to each other without burdening the CPU or bus controller.

Despite its various theoretical advantages and its backward compatibility with PCI-X and PCI devices, PCI-X 2.0 has not been implemented on a large scale (as of 2008), primarily because hardware vendors have chosen to integrate PCI Express instead.

IBM was one of the few vendors that provided PCI-X 2.0 (266 MHz) support, in its System i5 Model 515, 520 and 525; IBM advertised these slots as suitable for 10 Gigabit Ethernet adapters, which it also provided. HP offered PCI-X 2.0 in some ProLiant servers and offered dual-port 4 Gbit/s Fibre Channel adapters, also operating at 266 MHz. AMD supported PCI-X 2.0 (266 MHz) via its 8132 HyperTransport to PCI-X 2.0 tunnel chip. ServerWorks was a vocal supporter of PCI-X 2.0 (to the detriment of the first generation of PCI Express), particularly through its chief Raju Vegesna, who was however fired soon thereafter over roadmap disagreements with the Broadcom leadership.

In 2003, Dell announced it would skip PCI-X 2.0 in favor of more rapid adoption of PCI Express solutions. As reported by PC Magazine, Intel began to sideline PCI-X in its 2004 roadmap in favor of PCI Express, arguing that the latter had substantial advantages in terms of system latency and power consumption, more dramatically stated as avoiding “the 1,000-pin apocalypse” for its Tumwater chipset.

Technical Description

PCI-X revised the conventional PCI standard by doubling the maximum clock speed (from 66 MHz to 133 MHz) and hence the amount of data exchanged between the computer processor and peripherals. Conventional PCI supports up to 64 bits at 66 MHz (though anything above 32 bits at 33 MHz is seen only in high-end systems). The theoretical maximum amount of data exchanged between the processor and peripherals with PCI-X is 1.06 GB/s, compared to 133 MB/s with standard PCI. PCI-X also improves the fault tolerance of PCI, allowing, for example, faulty cards to be reinitialized or taken offline.
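The bandwidth figures above follow from simple arithmetic: a parallel bus moves one bus width of data per transfer, so the theoretical peak is the width in bytes multiplied by the transfer rate. The following is a minimal sketch under that assumption; the function name `peak_mb_s` is hypothetical, and integer nominal rates are used, so results can differ by a few MB/s from commonly quoted values (for example, 132 rather than 133 MB/s for 32-bit/33-MHz PCI, whose actual clock is 33.33 MHz).

```python
def peak_mb_s(width_bits: int, rate_mhz: float) -> float:
    """Theoretical peak bandwidth in MB/s for a parallel bus.

    Assumes one transfer of `width_bits` per cycle of `rate_mhz`
    and ignores all protocol overhead (address phases, turnaround).
    """
    return width_bits / 8 * rate_mhz


# Nominal (rounded) rates, as used in the text.
variants = {
    "PCI 32-bit / 33 MHz": (32, 33),
    "PCI 64-bit / 66 MHz": (64, 66),
    "PCI-X 64-bit / 133 MHz": (64, 133),
}

for name, (width, rate) in variants.items():
    print(f"{name}: {peak_mb_s(width, rate):.0f} MB/s")
```

These are upper bounds; sustained throughput on a shared bus is lower.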

PCI-X is backward compatible with PCI in the sense that the entire bus falls back to PCI if any card on the bus does not support PCI-X.

The two most fundamental changes are:

The shortest time between a signal appearing on the PCI bus and a response to that signal occurring on the bus has been extended to 2 cycles, rather than 1. This allows much faster clock rates, but causes many protocol changes:

- The ability of the conventional PCI bus protocol to insert wait states on any cycle based on the IRDY# and TRDY# signals has been deleted; PCI-X only allows bursts to be interrupted at 128-byte boundaries.

- The initiator must deassert FRAME# two cycles before the end of the transaction.

- The initiator may not insert wait states. The target may, but only before any data is transferred, and wait states for writes are limited to multiples of 2 clock cycles.

- Likewise, the length of a burst is decided before it begins; it may not be halted on an arbitrary cycle using the FRAME# and STOP# signals.

- Subtractive decode DEVSEL# takes place two cycles after the “slow DEVSEL#” cycle rather than on the next cycle.
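The 128-byte rule in the list above can be made concrete with a little address arithmetic. The sketch below lists the addresses inside a burst at which an interruption would be permitted, assuming the boundaries are naturally aligned multiples of 128 bytes; the function names are hypothetical and the code models only the alignment rule, not the bus signaling.

```python
BOUNDARY_BYTES = 128  # bursts may only be interrupted at these boundaries


def next_boundary(addr: int) -> int:
    """Return the first 128-byte-aligned address strictly above addr."""
    return (addr // BOUNDARY_BYTES + 1) * BOUNDARY_BYTES


def disconnect_points(start: int, length: int) -> list[int]:
    """Aligned addresses strictly inside the burst [start, start + length)."""
    end = start + length
    return list(range(next_boundary(start), end, BOUNDARY_BYTES))


# A 300-byte burst starting at 0x1F0 crosses three boundaries.
print([hex(a) for a in disconnect_points(0x1F0, 300)])
```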

After the address phase (and before any device has responded with DEVSEL#), there is an additional 1-cycle “attribute phase”, during which 36 additional bits (both AD and C/BE# lines are used) of information about the operation are transmitted. These include 16 bits of requester identification (PCI bus, device and function number), 12 bits of burst length, 5 bits of tag (for associating split transactions), and 3 bits of additional status.
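The field widths listed above add up to exactly 36 bits (16 + 12 + 5 + 3), matching the 32 AD lines plus 4 C/BE# lines. As an illustration only, the sketch below packs those fields into one 36-bit integer. The ordering of fields within the word is an assumption made for the example and is not taken from the specification; the 8/5/3 split of the requester ID into bus, device and function numbers follows the usual PCI addressing convention.

```python
from typing import NamedTuple


class Attributes(NamedTuple):
    bus: int        # 8 bits  (requester ID)
    device: int     # 5 bits  (requester ID)
    function: int   # 3 bits  (requester ID)
    burst_len: int  # 12 bits
    tag: int        # 5 bits
    status: int     # 3 bits


# (field, width) from most to least significant bit.
# NOTE: this ordering is hypothetical, chosen only for illustration.
LAYOUT = [("bus", 8), ("device", 5), ("function", 3),
          ("burst_len", 12), ("tag", 5), ("status", 3)]

TOTAL_BITS = sum(width for _, width in LAYOUT)


def pack(attrs: Attributes) -> int:
    """Pack the fields into a single integer, rejecting oversized values."""
    word = 0
    for name, width in LAYOUT:
        value = getattr(attrs, name)
        if not 0 <= value < (1 << width):
            raise ValueError(f"{name}={value} does not fit in {width} bits")
        word = (word << width) | value
    return word


def unpack(word: int) -> Attributes:
    """Inverse of pack(): peel fields off starting at the low-order end."""
    fields = {}
    for name, width in reversed(LAYOUT):
        fields[name] = word & ((1 << width) - 1)
        word >>= width
    return Attributes(**fields)
```

A round trip such as `unpack(pack(Attributes(2, 4, 0, 512, 7, 0)))` returns the original tuple, which is a quick check that no field overlaps another.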

Versions

Essentially all PCI-X cards or slots have a 64-bit implementation and vary as follows:

Cards:

- 66 MHz (added in Rev. 1.0)

- 100 MHz (implemented by a 133 MHz adapter on some servers)

- 133 MHz (added in Rev. 1.0)

- 266 MHz (added in Rev. 2.0)

- 533 MHz (added in Rev. 2.0)

Slots:

- 66 MHz (can be found on older servers)

- 133 MHz (most common on modern servers)

- 266 MHz (rare, being replaced by PCIe)

- 533 MHz (rare, being replaced by PCIe)

Mixing of 32-bit and 64-bit PCI Cards in Different Width Slots

Most 32-bit PCI cards will function properly in 64-bit PCI-X slots, but the bus speed will be limited to the clock frequency of the slowest card, an inherent limitation of PCI’s shared bus topology. For example, when a PCI 2.3 66-MHz card is installed into a PCI-X bus capable of 133 MHz, the entire bus backplane will be limited to 66 MHz. To get around this limitation, many motherboards have multiple PCI/PCI-X buses, with one bus intended for use with high-speed PCI-X peripherals, and the other bus intended for general-purpose peripherals.
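The slowest-card rule, together with the fallback to conventional PCI when any card lacks PCI-X support, can be expressed as a small decision function. This is a simplified model under stated assumptions, not the actual initialization sequence: the `Card` type and `negotiate` function are hypothetical names, and real buses select from a fixed set of supported frequencies.

```python
from dataclasses import dataclass


@dataclass
class Card:
    name: str
    max_mhz: int   # highest clock the card supports
    pcix: bool     # True if the card supports the PCI-X protocol


def negotiate(bus_max_mhz: int, cards: list[Card]) -> tuple[str, int]:
    """Return (mode, clock in MHz) for a shared bus segment.

    The mode is PCI-X only if every card supports it; the clock is
    capped by the slowest participant, including the bus itself.
    """
    mode = "PCI-X" if all(c.pcix for c in cards) else "PCI"
    clock = min([bus_max_mhz] + [c.max_mhz for c in cards])
    return mode, clock


# The example from the text: a 66-MHz PCI 2.3 card on a 133-MHz PCI-X bus.
cards = [Card("PCI-X NIC", 133, True), Card("PCI 2.3 card", 66, False)]
print(negotiate(133, cards))
```

Placing the slow card on a separate bus segment, as many motherboards allow, lets the first segment keep its full speed.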

Many 64-bit PCI-X cards are designed to work in 32-bit mode if inserted in shorter 32-bit connectors, with some loss of speed. An example of this is the Adaptec 29160 64-bit SCSI interface card. However some 64-bit cards do not work in standard 32-bit PCI slots. Even if it would work, installing a 64-bit card in a 32-bit slot will leave the 64-bit portion of the card edge connector not connected and overhanging, which requires that there be no motherboard components positioned so as to mechanically obstruct the overhanging portion of the card edge connector.

PCI-X vs PCI-E

PCI-X is often confused by name with the similar-sounding PCI Express, commonly abbreviated as PCI-E or PCIe, although the cards themselves are totally incompatible and look different. While both are high-speed computer buses for internal peripherals, they differ in many ways. PCI-X is a 64-bit parallel interface that is backward compatible with 32-bit PCI devices, whereas PCIe is a serial point-to-point connection with a different physical interface that was designed to supersede both PCI and PCI-X.

PCI-X and standard PCI buses may run on a PCIe bridge, similar to the way ISA buses ran on standard PCI buses in some computers. PCIe also matches PCI-X and even PCI-X 2.0 in maximum bandwidth. A single PCIe 1.0 lane (x1) offers 250 MB/s in each direction, and links of up to 16 lanes (x16) are currently supported, giving a maximum of 4 GB/s in each direction in full-duplex operation. PCI-X 2.0 offers (at its maximum 64-bit 533-MHz variant) a maximum bandwidth of 4,266 MB/s (~4.3 GB/s), although only in half-duplex.
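To make the duplex distinction concrete, the sketch below compares the two using only the figures quoted in this section. The function names are hypothetical, and the model is deliberately crude: it treats the PCI-X figure as a single pool shared by both directions and ignores protocol overhead on either bus.

```python
PCIE1_LANE_MB_S = 250      # per lane, per direction (PCIe 1.0)
PCIX2_PEAK_MB_S = 4266     # 64-bit, 533-MHz PCI-X 2.0, half-duplex


def pcie1_per_direction(lanes: int) -> int:
    """Peak MB/s in one direction for a PCIe 1.0 link with `lanes` lanes."""
    if not 1 <= lanes <= 16:
        raise ValueError("this sketch only covers x1 through x16")
    return lanes * PCIE1_LANE_MB_S


def pcie1_aggregate(lanes: int) -> int:
    """Full-duplex: both directions can carry data at the same time."""
    return 2 * pcie1_per_direction(lanes)


print("PCIe 1.0 x16, one direction:", pcie1_per_direction(16), "MB/s")
print("PCIe 1.0 x16, both directions:", pcie1_aggregate(16), "MB/s")
print("PCI-X 2.0, shared by both directions:", PCIX2_PEAK_MB_S, "MB/s")
```

On these numbers, PCI-X 2.0 slightly exceeds a PCIe 1.0 x16 link in one direction, while the PCIe link carries nearly twice as much when traffic flows both ways at once.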

PCI-X has technological and economic disadvantages compared to PCI Express. The 64-bit parallel interface requires difficult trace routing because, as with all parallel interfaces, the signals on the bus must arrive simultaneously or within a very short window, and noise from adjacent slots may cause interference. The serial interface of PCIe suffers fewer such problems and therefore does not require such complex and expensive designs. PCI-X buses, like standard PCI, are half-duplex bidirectional, whereas PCIe buses are full-duplex bidirectional. PCI-X buses run only as fast as the slowest device, whereas PCIe devices are able to negotiate their link speed independently. Also, PCI-X slots are longer than PCIe x1 through x16 slots, which makes it impossible to make short cards for PCI-X. PCI-X slots take up considerable space on motherboards, which can be a problem for ATX and smaller form factors.